The lathe & the punch card

Parables on productivity from the 19th century

A few weeks ago, Model Evaluation & Threat Research (METR), a Silicon Valley "AI safety" organization, published the results of a randomized controlled trial suggesting that large language models make programmers less productive. When a paper with a well-pedigreed source, a focus on programming, and a counterintuitive finding is tossed out into the maelstrom that is any conversation about AI and work these days, it's sure to get a reaction, and it did.

The paper (and response) concerned me as well, although for a different reason. Applying my normal technology lens, I do not believe it is likely that highly skilled workers will be wholly replaced by AI. But I do fret about what might become of already-overworked professionals, such as lawyers and programmers, who adopt AI with a hope and a prayer that it will somehow make them work less.

Because for all the bluster that accompanies these papers, no one stops to ask: What exactly does it mean to be "productive"?

1.

To get there, it's worth rehashing the details of the METR paper, because—my concerns about the faulty premise aside—it is a rare serious effort to evaluate the impact of AI on expert-level job performance. Such studies are hard to come by in law, and those that have been performed either use law students or (mis)use billing metrics to draw grand conclusions about relative performance.

The METR authors evaluate the work of programmers who contribute to open-source GitHub repositories. In plain English:

GitHub is a website for programmers built on "git," a widely used version control system that lets developers make changes in a codebase without breaking the underlying production software. By linking a project stored in git to GitHub, developers can collaborate on a project remotely and asynchronously through "repositories." The feature of interest in this paper is GitHub's "issues" tab, which gives every repository contributor an up-to-date list of problems that should be addressed.

"Open-source" means that the repository code is available for anyone to view. For example, Google's code for developing apps on Android software—which is open source—is freely available on GitHub.

Although in theory anyone can contribute to an open-source project, large open-source repositories typically support software that many people (especially developers) use. Thus, in practice, the developers who contribute to these projects are highly experienced, technically capable, and invested in the output of that repository.

The authors of the paper recruited study candidates by paying contributors to prominent, high-quality open-source repositories. The study participants then submitted lists of open issues in the repositories they worked on. Using a random process, the researchers selected two issues for the developers to solve, with the provision that only one issue could be solved using AI for support. The developers were asked to preregister their estimates of how long each task might take without AI, and then the actual time was recorded.

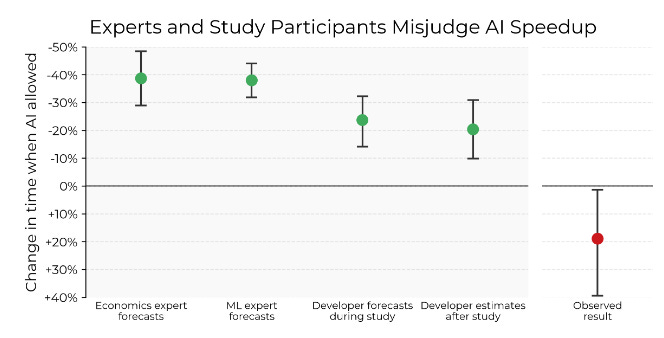

The "surprising" result appears in this chart, which shows that developers took longer on the AI task, in spite of their belief that they had performed the AI task more quickly.

If you want to go deeper, I suggest this blog post from Ben Recht, a machine learning researcher at Berkeley. For our purposes, the important thing to understand (which gets missed in many summaries) is that the headline result, the observed "slowdown," is not measured relative to an objective, experimental observation of performing the same task under both conditions, but against the developers' own estimates of how long the task would take.

I commend the authors for looking beyond stylized tasks to understand how AI might be used by knowledge workers on real tasks they face in their jobs. But I hesitate to describe this, as Steve Newman did, as a "proper scientific trial" of productivity. Not because I think a better experiment could have been designed, but because the premise is rotten. We are never going to prove whether a technology makes a skilled knowledge worker more productive, and attempting to do so only distorts our understanding of why we should adopt a technology in the first place.

2.

How did we get here? It's not a stretch to argue that it's all because of one man, Frederick Winslow Taylor, and his big idea: The "Principles of Scientific Management." Born in 1856 into a wealthy Philadelphia family, Taylor grew up alongside the Industrial Revolution, but was not of it. He attended Phillips Exeter Academy, the preeminent feeder school to Harvard, and passed his Harvard entrance exam, which largely tested his ability to read Greek and Latin, with honors. But, due to an undiagnosed astigmatism, his eyesight was failing and he suffered debilitating headaches, forcing him to drop out of Exeter and forgo Harvard.[1]

Instead, in 1874, he entered into a four-year patternmaking apprenticeship at a Philadelphia machine shop. Afterwards, he was hired—through family connections—to work on the shop floor at Philadelphia's Midvale Steel Works, which produced steel tires to wrap around the wheels of locomotives, much as the rubber tire wraps around the wheel of a car. Taylor was quickly promoted to foreman, leading a "gang" of machinists he'd previously worked alongside.

At the time, machinists at Midvale and elsewhere were paid on a "per-piece" rate, so that the worker could earn more if their output was greater. However, wage and hour laws did not exist in the 1870s, and managers would frequently and arbitrarily reduce the piece rate for workers who produced too many pieces. Because of this de facto maximum wage, the machinists Taylor worked with (and then managed) had optimized strategic indolence; they worked enough to get the output they needed to make their pay without threatening the boss's pocketbook. With no incentive to work harder, the machinists also did not approach their work systematically, instead using rules of thumb to determine, for example, the maximum speed at which a lathe could be run without wearing down a machine tool.

As Taylor learned the ways of the machine shop, he was convinced that the work could be done better, and persuaded his boss to fund a scientific study to determine the optimal rate for grinding steel so that output could be maximized while preserving the integrity of the company's machines. This "study" consumed the rest of Taylor's life, as he replicated his modest experiment across all of American industry, breaking every factory job into its constituent parts and designing the most efficient method for performing it. (In an oft-mentioned anecdote, Taylor (in)famously ascertained the ideal method for shoveling dirt.)

Because he reduced industrial craft to a mechanized process that could be taught to a worker of little skill, workers despised Taylor. Because he proposed that the most efficient workers be rewarded with the most pay to maximize output, factory owners shunned him. But despite this criticism, Taylor's influence was immediate and widespread. His acolytes included 20th-century progressive lawyers such as Louis Brandeis, the Supreme Court justice and champion of the worker, who cited Taylor's studies in his briefs to argue on behalf of labor, and, as we have seen, Reginald Heber Smith, who created the billable hour for law offices through the application of Taylorist principles.

Peter Drucker, the prolific 20th-century management theorist, argues that Taylor saw what the famous economists of the Industrial Revolution (Adam Smith, Marx, and others) before him did not: That among land, labor, and capital, labor can be optimized for productivity just as well as land and capital. More prosaically, workers do not come to the factory with fixed skills that can only be applied for longer hours; they can actually improve their skill through technique, just as new scientific discoveries can make farmland more fertile or make a piece of machinery more efficient. It is to Taylor's "application of knowledge to work" that Drucker (hyperbolically) attributes "all of the economic and social gains of the 20th century."

Yet in America, those gains came in the first half of the 20th century, through the literal application of Taylor's ideas on the factory floor. In the second half, as America entered the computer age and knowledge work grew at the expense of manual labor, Taylorism was so deeply ingrained in work culture that no one thought to ask whether his ideas made sense in an office. Writing in the early 1990s, Drucker argued that "[i]n terms of actual work on knowledge-worker productivity, we will be in the year 2000 roughly where we were in the year 1900 in terms of the productivity of the manual worker." In his 2024 book, Slow Productivity, Cal Newport reports that, in the intervening quarter-century, we have not advanced any further. As part of his research for the book, Newport surveyed readers of his blog and received almost 700 responses from knowledge workers. The survey included the question: "In your particular professional field, how would most people define 'productivity,' or 'being productive.'"

Newport wrote:

None of these answers included specific goals to meet, or performance measures that could differentiate between doing a job well versus badly. When quantity was mentioned, it tended to be in the general sense that more is always better...As I read through more of my surveys, an unsettling revelation began to emerge: for all of our complaining about the term, knowledge workers have no agreed-upon definition of what "productivity" even means.

3.

As Frederick Taylor methodically transformed the lives of front-line workers, another child of the Gilded Age, Herman Hollerith, was on his own quest to revolutionize back-office labor. After graduating from Columbia engineering school at age 19, Hollerith served his own version of an apprenticeship, joining one of his professors as an advisor to the 1880 Census, where he incredulously watched hundreds of people tabulate large statistical tables by hand.

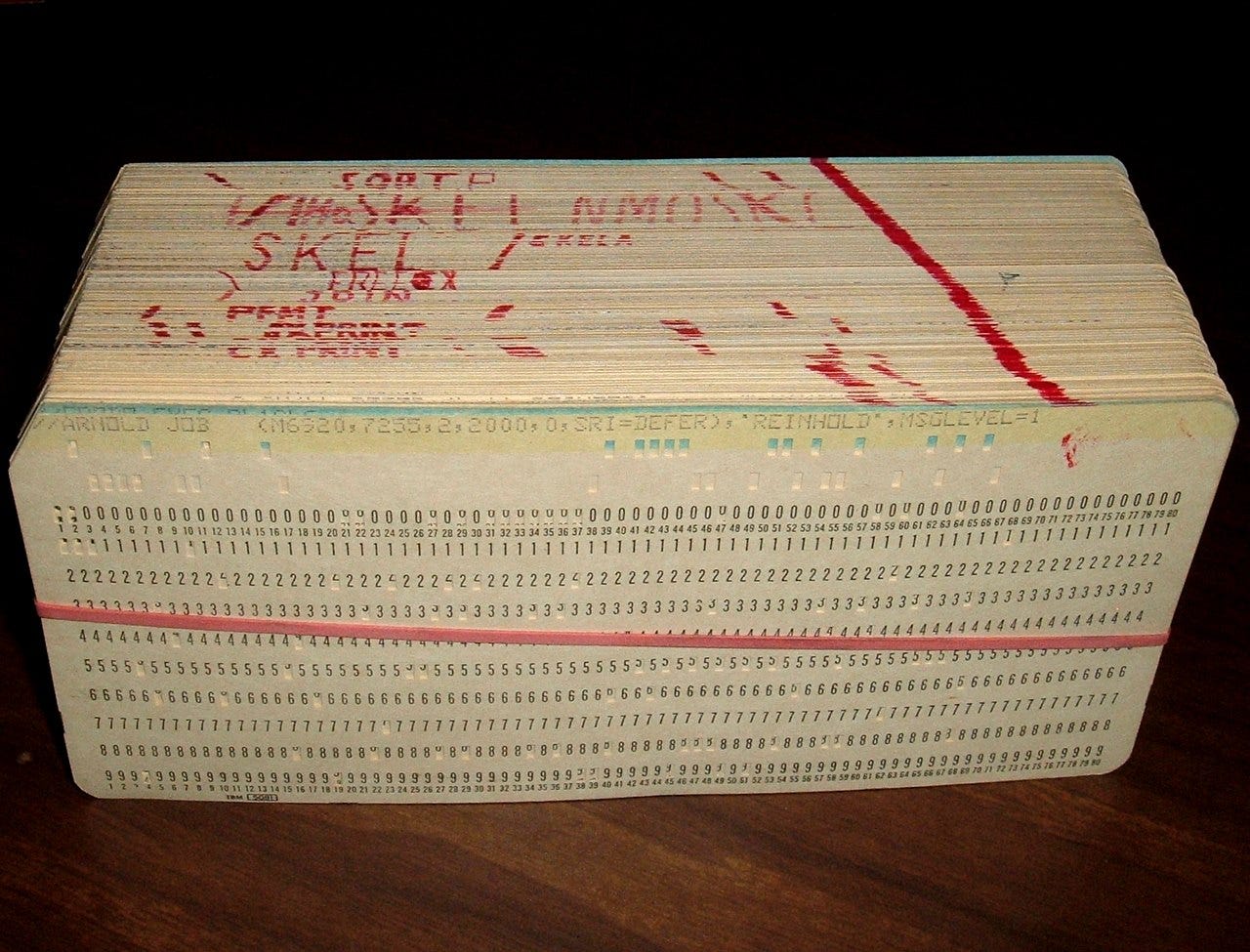

Afterward, Hollerith joined the engineering faculty at MIT, but he continued to ruminate on the idea of a "census machine" to speed up the ordinary data tabulation required in the effort to gather statistics about the entire U.S. population. Familiar with prior inventions such as the Jacquard loom, which used punched cards to mechanically produce complex weaving patterns, Hollerith had a eureka moment (or so the story goes) when he rode the train one day and observed the conductor using a hole punch to efficiently record information on passenger tickets. Riffing on this idea, Hollerith designed a machine that closed electrical circuits via holes punched at standardized locations in specially designed cards. A "tabulator" connected to the machine could then store a running count of statistics from each individual card.
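For readers who think in code, the tabulating idea can be sketched in a few lines. This is only a toy model, not a reconstruction of Hollerith's machine: the position names are invented for illustration, and a Python set stands in for the holes punched in one card. Each hole "closes a circuit" and advances the counter wired to that position.

```python
from collections import Counter

# Hypothetical card layout: each named position stands for one answer on a
# census form. These names are illustrative, not Hollerith's actual layout.
POSITIONS = {"male", "female", "age_under_20", "age_20_plus"}


def tabulate(cards):
    """Keep a running count per position across a stack of cards.

    Each card is modeled as the set of positions where a hole was punched.
    A hole lets the circuit for that position close, which advances the
    matching counter by one.
    """
    counters = Counter()
    for punched in cards:
        for position in punched:
            if position not in POSITIONS:
                raise ValueError(f"unknown position: {position}")
            counters[position] += 1
    return counters


# Three made-up records, one card per person.
cards = [
    {"female", "age_20_plus"},
    {"male", "age_under_20"},
    {"female", "age_under_20"},
]
totals = tabulate(cards)
print(totals["female"], totals["age_under_20"])
```

The point of the sketch is that the clerk's job shrinks to punching and feeding cards; the counting itself happens as a side effect of the card passing through the machine.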

Hollerith secured a patent for the first punch card machine in 1884, and the federal government awarded him a contract to use it for the 1890 Census. The new machine performed brilliantly. Instead of office clerks creating statistical tables by hand based on census forms, each individual record could be reduced into a series of punches that were then fed into one of Hollerith's machines. Although the U.S. population had grown by 25 percent between 1880 and 1890, the machines performed the tabulations more quickly and at much lower cost (by some estimates, as much as $5 million less) than the clerks Hollerith had observed 10 years prior.

Hollerith continued improving his machine in the 1890s, but the Census decided to develop its own machine for the 1900 Census. Without the government as a revenue source, Hollerith formed the "Tabulating Machine Company" and turned his attention to railroads, which employed massive accounting departments to ingest the raw data being produced at operations around the country to calculate the "total cost per ton-mile," the number used to set rates. As with the Census, this work was all done by hand until the 1890s.

The usefulness of a machine that could automate these calculations was obvious, and Hollerith faced competition from other inventors and the railroads themselves, some of which decided to build their own tabulators internally. His business was eventually acquired by a holding company, the "Computing-Tabulating-Recording Company" (C-T-R) in 1911. Under the leadership of general manager Thomas J. Watson, the company squelched any competition and had established a dominant market position by the 1920s, when Watson renamed the firm "International Business Machines Corporation," or IBM.

4.

Taylor changed business by enabling the manufacture of more proverbial widgets at lower cost. Hollerith's punch card machines, along with other office technologies such as calculators and typewriters, enabled businesses to keep track of all those new widgets through better recordkeeping. Significantly, the machines also allowed back offices to fashion new information "products" that would have been prohibitively difficult to produce (or inconceivable) before a punch card.

For example, between roughly 1890 and 1930:

Accountants at large companies (most notably DuPont) developed measurements (e.g., "return on investment") that are now commonplace but were previously impractical to calculate, revolutionizing the management of large companies.

Banks, no longer having to employ clerks to record all transactions by hand, created new, lower-cost products, such as fee-based checking accounts.

Although life insurance predated punch card machines, this industry was an early adopter of punch card technology, and some companies (notably Prudential) developed their own machines to compete with Hollerith; overall, the growth in data processing correlated with massive growth in the availability and variety of insurance products.

More information greased the wheels of capitalism. Thus, although Hollerith expressly designed the punch card machine to automate the clerical work he observed in the 1880 Census office, these innovations caused a rapid increase in the number of clerical workers from the turn of the century through the Great Depression. As the sociologist James Beniger recorded in his 1986 book, The Control Revolution: Technological and Economic Origins of the Information Society:

Bureaucratic growth during the period appears even more striking when occupational categories are refined to include only the most generalized office workers (the managers category cited above includes public officials and proprietors; “clerical” includes a wide range of kindred workers). The number of stenographers, typists, and secretaries increased 189 percent during 1900-1910, 103 percent in the 1910s, 40 percent in the 1920s, and 11 percent in the 1930s. Bookkeepers and cashiers increased 93 percent during 1900-1910, 38 percent in the 1910s, 20 percent in the 1920s (they decreased 2 percent over the 1930s). Office machine operators and related clerical workers increased 178 percent during 1900-1910, 102 percent in the 1910s, 30 percent in the 1920s, 31 percent in the 1930s. Accountants and auditors increased 70 percent during 1900-1910, 203 percent in the 1910s, 63 percent in the 1920s, 24 percent in the 1930s. Together, these four categories of office workers more than quadrupled their proportion of the total civilian labor force—from 2.1 to 8.6 percent—between 1900 and 1940 (U.S. Bureau of the Census 1975, pp. 140—141).
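The decade-by-decade percentages in that passage are easier to appreciate once they are compounded. The sketch below uses only the figures Beniger quotes; the cumulative multiples it prints are my own arithmetic, not numbers he reports, and they assume each decade's percentage applies to the headcount at the start of that decade.

```python
from math import prod

# Decade-over-decade growth, in percent, for 1900-1910, the 1910s, the 1920s,
# and the 1930s, exactly as quoted from Beniger above.
decade_growth_pct = {
    "stenographers, typists, and secretaries": [189, 103, 40, 11],
    "bookkeepers and cashiers": [93, 38, 20, -2],
    "office machine operators and related clerical workers": [178, 102, 30, 31],
    "accountants and auditors": [70, 203, 63, 24],
}


def cumulative_multiple(pcts):
    """Compound successive percentage changes into a single multiple."""
    return prod(1 + p / 100 for p in pcts)


# Assumption: growth compounds decade on decade from a 1900 baseline of 1.0.
multiples = {job: cumulative_multiple(p) for job, p in decade_growth_pct.items()}

# Share of the civilian labor force, 1900 versus 1940, from the same passage.
share_ratio = 8.6 / 2.1

for job, multiple in multiples.items():
    print(f"{job}: roughly {multiple:.1f}x the 1900 headcount by 1940")
print(f"share of the labor force: {share_ratio:.2f}x")
```

On these assumptions, three of the four categories end up at roughly nine to ten times their 1900 size, while their combined share of the labor force grows a little more than fourfold, which squares with Beniger's "more than quadrupled."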

The allusion to "office machine operators" hints at another source of employment growth beyond what we think of as traditional "clerical" jobs: The creation of new jobs devoted to the machines themselves. Of course, IBM engineers and tradespeople actually had to build the machines. IBM customers rented the machines from IBM and bought physical punch cards by the tens of thousands. Because IBM still owned the machines and wanted to keep customers, it hired and trained salespeople skilled enough to assist customers with ongoing questions about maintenance and operations.

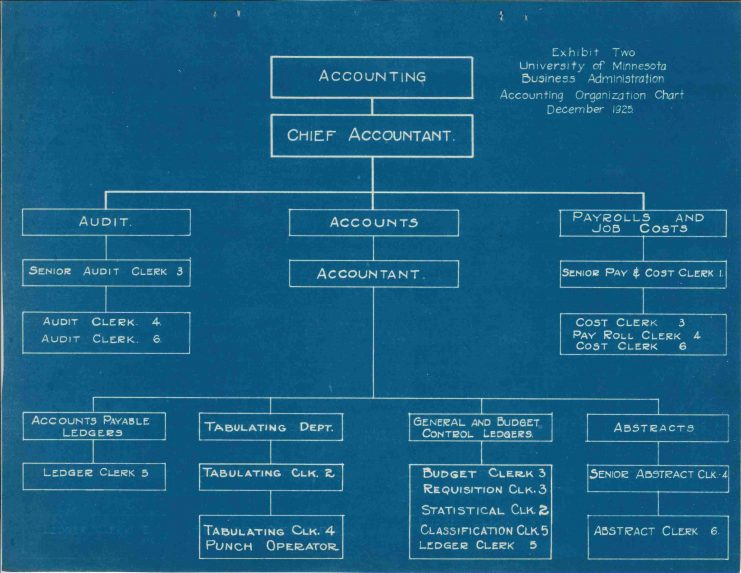

IBM's customers also inevitably hired their own staff to operate the machines. For example, technology historian Arthur Norberg writes that Aetna Life Insurance created its own "Hollerith Departments" inside each subdivision that operated a machine. And in this detailed review of the school's magazine archives, the University of Minnesota library provides an organizational chart showing that, by 1925, the University's accounting department had its own "tabulating department" to handle the machines that supported its work; the accounting department itself likely grew to accommodate the expanded volume of information.

In the 1950s, IBM turned its attention to the new market for computers, which improved upon the existing punch card machines with the invention of programmable computer chips. Previously, if a punch card clerk wanted to perform a different operation on her machine, she would have to rewire it. With the computer, the machine itself was a blank slate and you could now take a stack of punch cards and write out a custom routine ("software") that the machine would then carry out.

With this change, a whole new job category emerged: That of "programmers" or "data processors" who had the technical expertise to write these programs and operate the machines. As these skills became more complex and specialized, and the data produced by these machines became ever more voluminous, new interstitial roles were created, such as the "systems man," a 1950s and 60s precursor to the business analysts and database engineers of the 1980s, and the data analysts and data engineers of today. And in the age of the Internet, as computers get ever smaller and cheaper, new software is constantly invented, such that a simple web application in 2025 can power a completely legitimate and profitable business in a way that would be incomprehensible even in 1990.

Although many do not know his name, we can draw a straight line from Frederick Taylor to the automation and offshoring of American manufacturing, a factor always present in our national consciousness. Whatever we think of his contribution, Taylor's genius was in his ability to read into the muddy abstraction of manual labor a system that could be studied, quantified, and monetized. Taylor and his contemporaries never talked of "productivity"—the word wasn't in their lexicon—but we can fairly attribute the idea of productivity to him and his ideas.

If we take a broad definition to include punch card machines, computers have been inside business at least as long as Taylor's ideas. But we have not found the computing equivalent of Taylor standing at the lathe. Maybe this is impossible, and we may well be better off feeling our way through abstraction than ruthlessly measuring yet another aspect of our lives. But as the METR paper shows, whether it makes sense or not, Taylorism is so deeply rooted that we are hard-pressed to think of work as anything other than a series of tasks to be counted and made ever more productive. And thus, like Charlie Brown preparing to kick the football, we anticipate each innovation as the solution to all our "productivity" problems, only to be confused when we just get more work instead.

[1] I pulled most of the biographical details from The One Best Way, a very good 1997 biography of Taylor.